i3DPost Multi-view Human Action Datasets

This webpage distributes the multi-view/3D human action and interaction datasets created in a collaboration between the University of Surrey and CERTH-ITI within the i3DPost project. The datasets are described in detail in the following paper:

N. Gkalelis, H. Kim, A. Hilton, N. Nikolaidis and I. Pitas, "The i3DPost multi-view and 3D human action/interaction database," Proc. CVMP, pp. 159–168, 2009.

To access the full datasets, please read the license agreement and, if you agree to it, email Dr. Hansung Kim with the following information:

- Your name and affiliation
- The name and email address of your supervisor (if you are a student)

Access to the datasets (ID/password required)

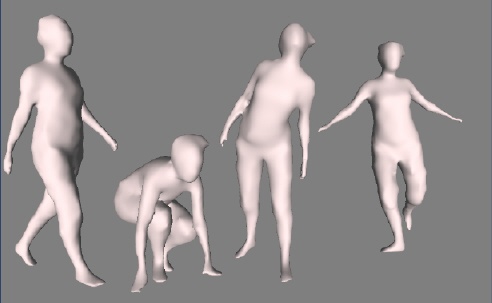

If you are granted access, the following datasets are provided:

- Multi-view datasets
  - Synchronised, uncompressed HD image sequences from 8 views, covering 13 actions performed by 8 people (104 sequences in total)
  - Sample videos
  - Actors/actresses: Man1, Woman1, Man2, Man3, Woman2, Man4, Man5, Man6
  - Actions: Walk, Run, Jump, Bend, Hand-wave, Jump-in-place, Sit-StandUp, Run-fall, Walk-sit, Run-jump-walk, Handshake, Pull, Facial-expressions
  - Background images for matting
  - Camera calibration parameters (intrinsic and extrinsic) for 3D reconstruction
- 3D mesh models
  - All frames and actions of the above datasets in ntri ASCII format

The models were reconstructed using a global optimisation method:

J. Starck and A. Hilton, “Surface capture for performance based animation,” IEEE Comp. Graph. Appl., 27(3), pp. 21–31, 2007.
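
This page does not specify the calibration file format or the camera model. As a rough illustration of how intrinsic and extrinsic parameters are typically combined in 3D reconstruction, the sketch below assumes a standard pinhole camera, in which a world point X maps to pixel coordinates through x ~ K(RX + t), with intrinsic matrix K, rotation R and translation t. It ignores lens distortion. The helper names (`mat_vec`, `project_point`) and all numeric values are hypothetical and are not taken from the dataset.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vector3 = Sequence[float]


def mat_vec(m: Matrix3, v: Vector3) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector (hypothetical helper)."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def project_point(K: Matrix3, R: Matrix3, t: Vector3, X: Vector3) -> Tuple[float, float]:
    """Project world point X to pixel coordinates (u, v) via x ~ K (R X + t).

    Assumption: an ideal pinhole camera with no lens distortion; the real
    i3DPost calibration files may use a different convention or extra terms.
    """
    # Extrinsic step: rotate and translate world coordinates into the camera frame.
    Xc = [a + b for a, b in zip(mat_vec(R, X), t)]
    if Xc[2] <= 0:
        raise ValueError("point lies on or behind the camera plane")
    # Intrinsic step: map camera coordinates to homogeneous pixel coordinates.
    x = mat_vec(K, Xc)
    # Perspective division turns homogeneous coordinates into pixels.
    return x[0] / x[2], x[1] / x[2]
```

For example, with a hypothetical focal length of 1000 pixels, principal point (960, 540), identity rotation and zero translation, `project_point(K, R, [0, 0, 0], [0.5, -0.25, 2.0])` returns `(1210.0, 415.0)`. Projecting reconstructed mesh vertices into each of the 8 views in this way is one way to check that a mesh and the calibration agree.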

Centre for Vision, Speech and Signal Processing, University of Surrey, Guildford, UK

Artificial Intelligence & Information Analysis Lab, CERTH-ITI, Thessaloniki, Greece

i3DPost, an EU project under the Framework 7 ICT Programme

To contact us: Dr. Hansung Kim ([email protected])